Support Vector Machines

Mathematics behind

Prerequisites:

1. Some knowledge of vectors and linear algebra.

2. Lagrange Multipliers

3. Analytical and learning attitude

Analogy:

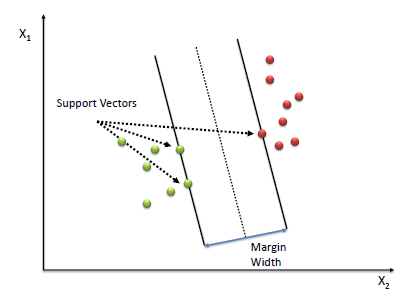

The main concept or intuition behind SVM is to find the widest separation between two classes, here red and green dots.

Let ‘m’ be a hyper-plane separating the two classes and ‘w’ be a vector which is perpendicular to the hyper-plane.

‘xr’ be a vector representing the one of the red sample, let us say red sample in the above picture.

‘xg’ be a vector representing the one of the green sample, let us say red sample in the above picture.

For an unknown sample u we take its projection on vector w which is perpendicular to hyper-plane and make an assumption that it should be greater than some value c to be a positive sample.

Our decision condition becomes:

w.u ≥ c

w.u + b ≥ 0 where b = -c

Constraint:

For xr at the margin width w.x +b ≥ 1;

For xg at the margin width w.x +b ≤ -1.

Combined equation can be written as y.(w.x +b) ≥1 where y = 1 for xr and y =-1 for xg.

Equation for width

Width of the margin can be represented as ( xr-xg ).w/|w| i.e. dot product of difference of (xr and xg ) and unit vector perpendicular to hyper-place.

If we maximise the width function then we are through.

Boundary condition i.e. at the margin xr and xg:

y(w.xr + b) = 1

w.xr +b = 1

w.xr = 1 -b

y(w.xg + b) = 1

-w.xg -b =1

-w.xg = 1+b

width = (xr-xg).w/|w|

width = (w.xr -w.xg)/|w|

width = 2/|w|

Now to maximise width => minimise |w| => min (0.5 * |w|²)

* * min (0.5 * |w|²) this transformation is done because it is more convenient in further calculation.

Lagrange Multipliers

Using Lagrange multipliers we find the maximum width i.e. min (0.5 * |w|²)

constraint y(w.xr + b) -1 ≥ 0

L = 0.5 * |w|² — Σai.[yi(w.xi +b) -1]

we differentiate L w.r.t w and equate it to zero we get:

w = Σai.yi.xi

we differentiate L w.r.t b and equate it to zero we get:

Σai.yi = 0

Decision condition becomes:

Σai.yi.xi.u +b ≥ 0 then a red class.

Kernel Trick

A dataset which is linearly separable is hardly encountered in practical scenarios to overcome this drawback the dataset is transformed to new dimension in which it becomes linearly separable. This transformation is known as kernel trick.

We have seen that the classifier depends only the dot product of the two datapoint vectors.

Kernel is function that transforms xi.xj to Φ(xi) . Φ(xj)

In case of queries please feel free to reach me here.

Reference:-

1. https://www.youtube.com/watch?v=_PwhiWxHK8o

2.https://en.wikipedia.org/wiki/Support-vector_machine

3.https://www.researchgate.net/publication/221621494_Support_Vector_Machines_Theory_and_Applications